What happened to the Soviet Venera probes sent to Venus?

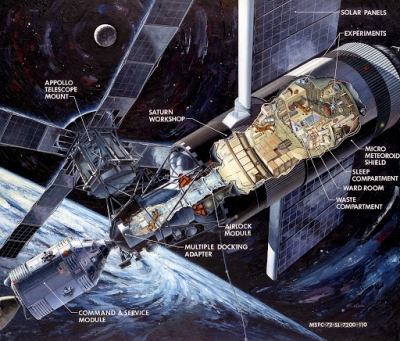

When we speak about the space race between the U.S. and the Soviet Union in the second half of the 20th century, we often focus on the Moon missions. There were, however, many other missions during this period with quite different objectives. One of these was the Venera programme, a series of probes that the Soviet Union developed between 1961 and 1984 to better understand our neighbouring planet, Venus.

Launched in 1961, Venera 1 lost radio contact before it flew by Venus. Venera 2 failed to send back any useful data from Venus, although it did fly by the planet at a distance of 24,000 km in February 1966. Venera 3 also lost communication before atmospheric entry, but on March 1, 1966, it became the first human-made object to reach the surface of another planet.

The planned mission included landing on the Venusian surface and studying the temperature, pressure and composition of the Venusian atmosphere. For this, Venera 3 carried a landing capsule 0.9 m in diameter and weighing 310 kg, which was to study the atmosphere while descending by parachute.

Positive start

Venera 3 was launched on November 16, 1965, just four days after the successful launch of Venera 2. The early part of the mission went well: ground controllers successfully performed a mid-course correction on December 26, 1965, during the outbound trajectory, and conducted multiple communication sessions that returned valuable data.

Among these were data obtained from a modulation charged-particle trap. For nearly 50 days after launch, Venera 3 thus provided insight into the energy spectra of solar wind ion streams beyond Earth's magnetosphere.

A failure and a first

On February 16, 1966, about two weeks before Venera 3 was due to enter the Venusian atmosphere, the spacecraft lost all contact with scientists on Earth. Despite the communications failure, the spacecraft released the lander automatically.

At 06:56:26 UT (Universal Time) on March 1, 1966, Venera 3’s probe crash-landed on the surface of Venus, just four minutes earlier than planned. Because contact had been lost, it could not relay back any information, but it was the first time a human-made object had struck the surface of a planet other than our own.

Success follows

Investigations revealed that Venera 2 and Venera 3 suffered similar failures, caused by the overheating of several internal components and of the solar panels. Venera 3’s impact site was on the night side of Venus, in an area between 20 degrees north and 30 degrees south latitude and between 60 and 80 degrees east longitude.

Although Venera 3’s mission was largely a failure, it achieved a notable first and paved the way for several later successes. Venera 4 became the first probe to directly measure the atmosphere of another planet, Venera 7 became the first to make a soft landing on another planet and transmit data from its surface, and Venera 13 and 14 returned colour photos of the Venusian surface within days of each other. Venera 13, in fact, transmitted its photos on March 1, 1982, exactly 16 years after Venera 3 had reached the surface of Venus.

Picture Credit: Google