DO ALL SCIENTISTS WORK IN LABORATORIES?

Some scientists do wear white coats and work with test tubes, but many do most of their work in the world outside. A geologist, for example, may have to clamber up a cliff face to obtain samples of rock. There is a wide variety of jobs and careers that require knowledge and application of science, from research to business and from regulation to teaching.

The Business Scientist underpins excellent management and business skills with scientific knowledge, supporting evidence-led decision-making within companies and other enterprises. This type of scientist has the scientific and technical knowledge to be credible with colleagues and competitors, as well as confidence in a business environment. They are found in science and technology companies in a wide variety of roles, from R&D and marketing to the C-suite itself.

The Developer, or translational, Scientist uses the knowledge generated by others and transforms it into something that society can use. They might be developing products or services, ideas that change behaviour, improvements in health care and medicines, or the application of existing technology in new settings.

They are found in research environments and may be working with Entrepreneur and Business scientists to help bring their ideas to market.

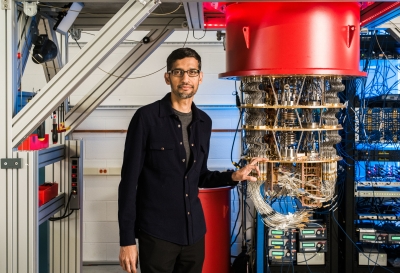

The Entrepreneur Scientist makes innovation happen. Their scientific knowledge and connections are deep enough for them to see opportunities for innovation – not just in business, but also in the public sector and other sectors of society.

They blend their science knowledge and credibility with people management skills, entrepreneurial flair and a strong understanding of business and finance, to start their own businesses or help grow existing companies.

The Explorer Scientist is someone who, like the crew of the Enterprise, is on a journey of discovery “to boldly go where no one has gone before”. They rarely focus on a specific outcome or impact; rather they want to know the next piece of the jigsaw of scientific understanding and knowledge. They are likely to be found in a university or research centre or in Research & Development (R&D) at an organisation, and are likely to be working alone.

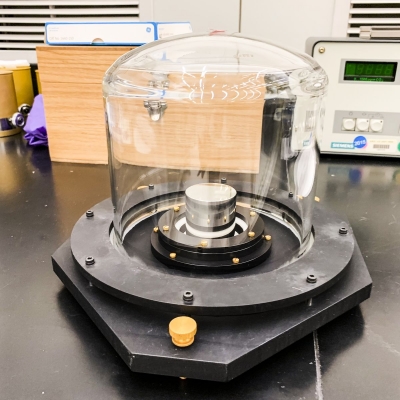

The Regulator Scientist is there to reassure the public that systems and technology are reliable and safe, through monitoring and regulation. They will have a mix of skills and, while they may not get involved in things like lab work, they will have a thorough understanding of the science and the processes involved in monitoring its use or application. They are found in regulatory bodies, such as the Food Standards Agency, and in a wide range of testing and measurement services.

The Technician Scientist provides operational scientific services in a wide range of ways. These are the scientists we have come to depend on within the health service, forensic science, food science, health and safety, materials analysis and testing, education and many other areas. Rarely visible, this type of scientist is found in laboratories and other support service environments across a wide variety of sectors.

The Investigator Scientist digs into the unknown, observing, mapping, understanding and piecing together in-depth knowledge and data, and setting out the landscape for others to translate and develop. They are likely to be found in a university or research centre or in Research & Development (R&D) at an organisation, working in a team and likely in a multi-disciplinary environment.